Volume Snapshots

This article provides a tutorial on creating dynamic persistent volumes using Azure Disks, which can be used by individual pods in an Azure Kubernetes Service (AKS) cluster. It also covers the process of creating a snapshot of the persistent volume.

I did not write this article because documentation on the topic is missing from the Internet, but because I wanted a walkthrough that is verified to work. Although there are several articles on the topic, I could not follow any of them from start to finish and get the result I expected.

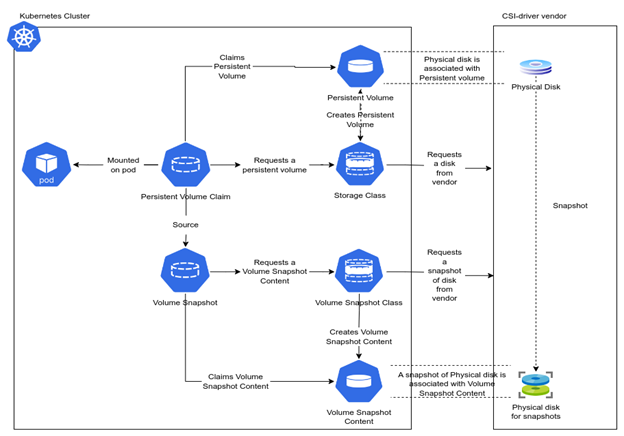

In Kubernetes, a VolumeSnapshot is a snapshot of a volume on a storage system. It captures the state of a volume at a particular moment in time, and allows you to restore the volume to that state later if needed. This feature is particularly useful for data backups where you need to quickly restore your data to a previous state in case of a failure or data loss.

Are you already familiar with Kubernetes persistent volumes? A persistent volume is a piece of storage that has been provisioned for use by Kubernetes pods. This storage resource can be accessed by one or several pods and can be provisioned dynamically or statically.

1. Dynamically provision a volume

A Persistent Volume Claim (PVC) in Kubernetes can use a StorageClass object to dynamically provision an Azure Disk. The StorageClass defines the properties of the storage resource that will be provisioned, such as the disk type and performance characteristics, while the PVC requests the size and access mode.
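The `managed-csi` class used in this article is one of the storage classes that AKS ships with. If different disk properties are needed, a custom class could be defined along the following lines. This is only a minimal sketch: the class name `managed-csi-standard-ssd` and the chosen values are illustrative assumptions, not part of the setup described here.

```yaml
# Hypothetical custom StorageClass for the Azure Disk CSI driver.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-csi-standard-ssd   # assumed name, pick your own
provisioner: disk.csi.azure.com    # Azure Disk CSI driver
parameters:
  skuName: StandardSSD_LRS         # disk type (SKU) of the provisioned disk
reclaimPolicy: Delete              # delete the disk when the PV is released
volumeBindingMode: WaitForFirstConsumer   # provision once a pod is scheduled
allowVolumeExpansion: true         # allow the PVC to be resized later
```

A PVC would then reference this class through its `storageClassName` field instead of `managed-csi`.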

The following definition creates the PVC:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: data-volume
    spec:
      accessModes:
        - ReadWriteOnce
      storageClassName: managed-csi
      resources:
        requests:
          storage: 4Gi
      volumeMode: Filesystem

Once the persistent volume claim has been created, a pod can be created with access to the disk.

The following manifests create a basic service called data-service and a basic NGINX deployment that uses the persistent volume claim named data-volume to mount the Azure Disk at the path /data.

    kind: Service
    apiVersion: v1
    metadata:
      name: data-service
    spec:
      ports:
        - name: http
          protocol: TCP
          port: 80
          targetPort: 80
      selector:
        app: data
      type: ClusterIP
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: data
      name: data-volume-deploy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: data
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: data
        spec:
          containers:
            - image: nginx:alpine
              imagePullPolicy: Always
              name: nginx
              # Mount the Azure Disk inside the container at /data
              volumeMounts:
                - mountPath: /data
                  name: data-volume-pvc
              readinessProbe:
                tcpSocket:
                  port: 80
                initialDelaySeconds: 1
                timeoutSeconds: 5
                periodSeconds: 5
                failureThreshold: 13
              startupProbe:
                tcpSocket:
                  port: 80
                initialDelaySeconds: 1
                timeoutSeconds: 5
                periodSeconds: 5
                successThreshold: 1
                failureThreshold: 13
              resources:
                requests:
                  memory: 100Mi
                  cpu: 90m
                limits:
                  memory: 200Mi
                  cpu: 180m
          # Bind the pod volume to the PVC created earlier
          volumes:
            - name: data-volume-pvc
              persistentVolumeClaim:
                claimName: data-volume

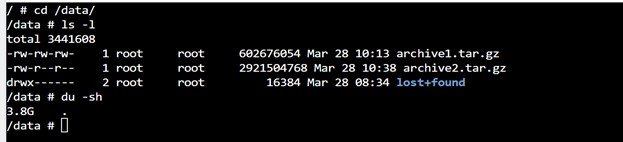

I added two archives to the volume and verified their size:

2. Volume Snapshot

A VolumeSnapshotClass is the snapshot equivalent of a StorageClass in Kubernetes. It defines the CSI driver and the parameters used to create a volume snapshot. When a request is received through a VolumeSnapshot, a VolumeSnapshotContent is created according to this class.

A VolumeSnapshotContent is the equivalent of a PV. It represents a snapshot of the source volume's data on the storage system, either pre-provisioned by a cluster administrator or dynamically provisioned through a VolumeSnapshotClass.

A VolumeSnapshot is the equivalent of a PVC. It is a user's request for a snapshot of a volume.

    # snapshot.storage.k8s.io/v1 is the GA API (Kubernetes 1.20+); v1beta1 is deprecated
    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshotClass
    metadata:
      name: vsc-azure-csi
      labels:
        app.kubernetes.io/name: csi
    driver: disk.csi.azure.com
    deletionPolicy: Delete
    parameters:
      # available values: "true", "false" ("true" by default for Azure Public Cloud)
      incremental: "true"

Snapshots are created using the VolumeSnapshot resource. In it, specify the PVC to take a snapshot of:

    # snapshot.storage.k8s.io/v1 is the GA API (Kubernetes 1.20+); v1beta1 is deprecated
    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshot
    metadata:
      name: data-volume-snapshot
      labels:
        app.kubernetes.io/name: csi
    spec:
      volumeSnapshotClassName: vsc-azure-csi
      source:
        persistentVolumeClaimName: data-volume

Check whether the VolumeSnapshotClass and VolumeSnapshot resources are provisioned correctly.
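As a rough guide to what "provisioned correctly" means, a healthy VolumeSnapshot usually reports a status similar to the excerpt below when it is read back from the cluster in YAML form. This is an illustrative sketch, not output captured from the cluster used in this article: the field names follow the snapshot.storage.k8s.io/v1 API, while the values under `status` are placeholders or assumptions.

```yaml
# Illustrative excerpt only; status values are placeholders, not real output.
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: data-volume-snapshot
spec:
  volumeSnapshotClassName: vsc-azure-csi
  source:
    persistentVolumeClaimName: data-volume
status:
  # Name of the VolumeSnapshotContent bound to this snapshot (generated by the cluster)
  boundVolumeSnapshotContentName: "<generated-content-name>"
  # true once the snapshot can be used as a restore source
  readyToUse: true
  # Minimum size of a volume restored from this snapshot (assumed to match the 4Gi PVC)
  restoreSize: 4Gi
```

The field to look for is `readyToUse: true`. While it is still `false`, a PVC restored from the snapshot is expected to stay pending.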

Backed-up data can be restored to a managed disk from the snapshot created above. This is done by creating a PersistentVolumeClaim resource based on an existing VolumeSnapshot. The CSI provisioner will then create a new PersistentVolume from the snapshot.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: data-volume-restored
      labels:
        app.kubernetes.io/name: csi
    spec:
      storageClassName: managed-csi-premium
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 4Gi
      # Populate the new volume from the snapshot
      dataSource:
        name: data-volume-snapshot
        kind: VolumeSnapshot
        apiGroup: snapshot.storage.k8s.io

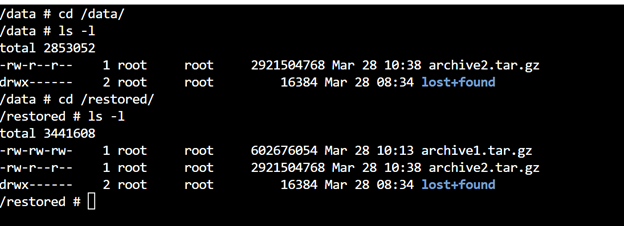

I mounted the newly created PVC on the deployment used in the first section, and now I can see both volumes: /data, from which I deleted one of the files to make the example meaningful, and /restored, which holds the files I backed up.
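Mounting both claims could look roughly like the sketch below, which is a trimmed version of the deployment from the first section. The volume name `data-volume-restored-pvc` is a hypothetical name chosen for illustration, and the probes and resource limits are left out for brevity.

```yaml
# Trimmed sketch: the deployment from section 1 with the restored PVC added.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: data-volume-deploy
  labels:
    app: data
spec:
  replicas: 1
  selector:
    matchLabels:
      app: data
  template:
    metadata:
      labels:
        app: data
    spec:
      containers:
        - name: nginx
          image: nginx:alpine
          volumeMounts:
            # Original volume
            - name: data-volume-pvc
              mountPath: /data
            # Volume restored from the snapshot (hypothetical volume name)
            - name: data-volume-restored-pvc
              mountPath: /restored
      volumes:
        - name: data-volume-pvc
          persistentVolumeClaim:
            claimName: data-volume
        - name: data-volume-restored-pvc
          persistentVolumeClaim:
            claimName: data-volume-restored
```

Because both claims use ReadWriteOnce, both disks are attached to the node on which the single replica runs.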

One interesting thing is that you can restore from the snapshot into a volume whose storage class differs from that of the original volume. In this example, the storageClassName used for data-volume is managed-csi, while the storageClassName used for data-volume-restored is managed-csi-premium.
